Howdy, Stranger!

It looks like you're new here. If you want to get involved, click one of these buttons!

Categories

In this Discussion

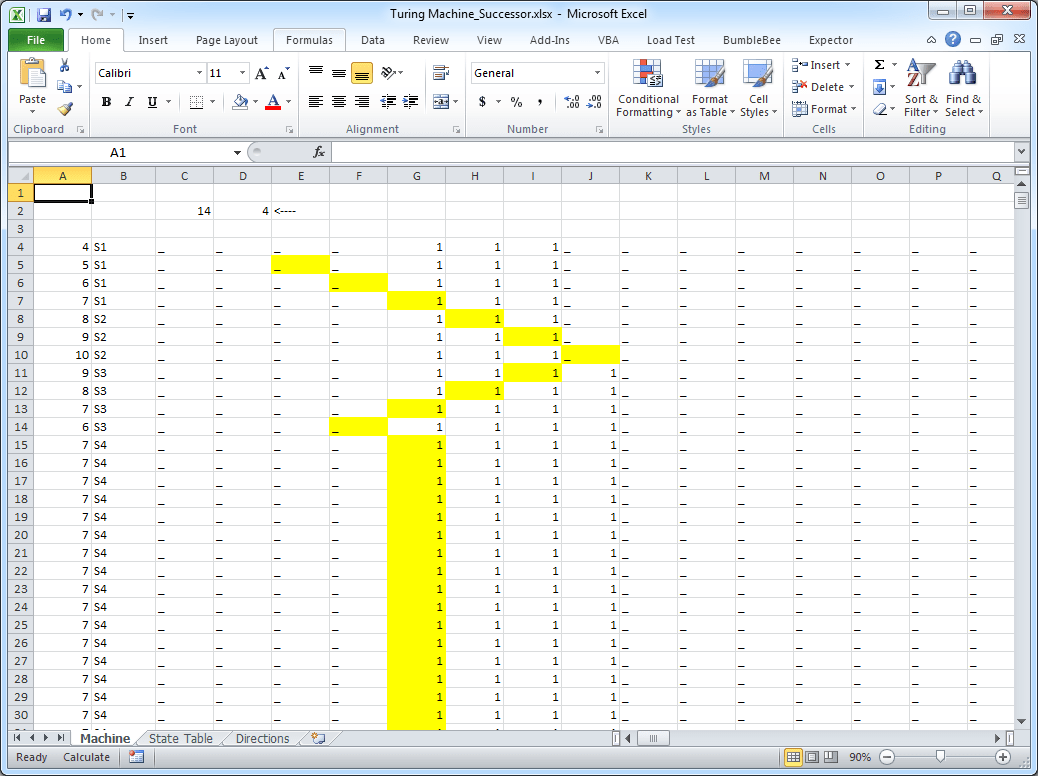

Code Critique: A Turing Machine in a Spreadsheet

Arguably, there is more programming done today in spreadsheets than in any other programming language. (Or is it a programming language at all?) I'd like to kick off a discussion of how Critical Code Studies can approach the analysis of programming in spreadsheets with a look at an unusual example: a Turing Machine built in a spreadsheet.

The Turing Machine is the hypothetical contraption Alan Turing described in "On Computable Numbers, with an Application to the Entscheidungsproblem." A team of Netherlands-based developers, including Felienne Hermans, was at a retreat when they decided to try to build one in Excel.

Object of Study: A Turing Machine built in a spreadsheet

Language: Excel

Developer: Felienne and Co.

When: 2013

Demo: The Turing Machine spreadsheet
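
To make the idea concrete, here is a minimal Python sketch of how a Turing Machine might be laid out as rows in a grid. It is only an illustration under stated assumptions, not a description of how the actual spreadsheet works: the one-row-per-step layout, the `RULES` table, and the bit-flipping machine are all made up for this example. Each row is computed only from the row above it, the way a copied-down formula would be.

```python
# Hypothetical sketch: each "row" is one step of the machine, computed only
# from the row above it, the way a copied-down spreadsheet formula would be.
BLANK = "_"

# Transition table for a made-up machine that flips every bit and halts at
# the first blank: (state, symbol) -> (new_state, symbol_to_write, head_move)
RULES = {
    ("flip", "0"): ("flip", "1", +1),
    ("flip", "1"): ("flip", "0", +1),
    ("flip", BLANK): ("halt", BLANK, 0),
}


def next_row(row):
    """Compute the next row (state, head, tape) from the previous one."""
    state, head, tape = row
    if state == "halt":
        return row  # a halted machine just repeats its last row
    new_state, write, move = RULES[(state, tape[head])]
    new_tape = tape[:head] + (write,) + tape[head + 1:]
    return (new_state, head + move, new_tape)


def run(tape_string, steps):
    """Fill a fixed number of rows, as a sheet with a fixed number of rows would."""
    rows = [("flip", 0, tuple(tape_string) + (BLANK,))]
    for _ in range(steps):
        rows.append(next_row(rows[-1]))
    return rows


rows = run("1011", steps=6)
for state, head, tape in rows:
    print(state, head, "".join(tape))
```

Note that the number of rows is fixed in advance here. A grid with a bounded number of rows can only simulate a bounded number of steps, which is one reason the question of whether such a sheet is "really" a Turing Machine is worth discussing.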

In addition to examining this use of a spreadsheet to create a Turing Machine, I would like to open up the discussion about spreadsheet programming. First of all, so much research depends on work done in spreadsheets. Consider this small example of spreadsheet work gone awry. However, seeing this work in spreadsheets as programming may seem counterintuitive. I recently had a conversation with UCLA's Todd Millstein, who demonstrated the conditional statements and loops that can be written into spreadsheets (and pointed me toward that previous example). But certainly, most of this work does not look like our conventional concept of "coding." The interface metaphor itself is distracting. However, considering even formulas in spreadsheets as programming starts to dissolve the conceptual boundary between programming and mathematics. Perhaps the Turing Machine is a good place to start because it is itself a mathematical model/specification/thought experiment.

Also useful is Felienne's talk:

Questions:

- How do we understand spreadsheets as programming languages? Or do we consider them more like IDEs? APIs/COM components?

- What language/methods do we need to describe the kinds of programming done in spreadsheets?

- What other examples of programs written in spreadsheets should we examine?

But specifically: What does this implementation of a Turing Machine teach us about Turing's formulation of this model?

I suspect this piece in particular might speak to @ebuswell's discussion of state in "Creative and Critical Coding. Coding as Method?"

Comments

I have a number of remarks (maybe because I have done quite a lot of research in the area of spreadsheets, see here, here, and here). To simplify the discussion, I will split my comments into different posts.

A spreadsheet is a first-order functional program: Each formula describes a computation as a function, and the references in a formula provide the arguments to the function. A spreadsheet system is thus a first-order functional programming language. Here "first-order" means that you cannot pass functions as arguments or return them as results.
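
The claim above can be sketched in a few lines of Python. This is a toy model, not how any real spreadsheet engine is implemented: the `sheet` dictionary, the `(function, references)` encoding of a formula, and the helper names are all assumptions made for illustration. Cells hold either a plain value or a formula whose arguments are other cells; no cell can hold a function or pass one to another formula, which is the "first-order" restriction.

```python
# Hypothetical model: a sheet maps cell names to either a plain value or a
# formula, written here as (function, list of referenced cell names).
def total(*args):
    return sum(args)


def product(*args):
    result = 1
    for a in args:
        result *= a
    return result


sheet = {
    "A1": 3,
    "A2": 4,
    "A3": (total, ["A1", "A2"]),    # like =A1+A2
    "B1": (product, ["A3", "A1"]),  # like =A3*A1
}


def evaluate(sheet, name):
    """Evaluate one cell by first evaluating the cells it references.

    Assumes the references contain no cycles.
    """
    cell = sheet[name]
    if isinstance(cell, tuple):
        func, refs = cell
        return func(*(evaluate(sheet, ref) for ref in refs))
    return cell  # a plain value denotes itself


values = {name: evaluate(sheet, name) for name in sheet}
print(values)
```

The evaluator itself uses recursion, but that belongs to the host language. The modeled sheet has no way to express a loop or a recursive definition, and every formula reduces to a value in a finite number of steps as long as the references are acyclic.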

The basic spreadsheet model is not Turing-complete (ignoring loops that can be found in Excel). This is what makes spreadsheets interesting from a programming language perspective, because the limitation in expressiveness together with the spatial embedding of the computation makes them amenable to a wide range of program analyses that do not work for Turing-complete languages (for more, see Software Engineering for Spreadsheets).

I think implementing a Turing machine in spreadsheets is actually a distraction from the essence of the spreadsheet programming model, which is not Turing-complete. This means that the absence of loops and recursion restricts the expressiveness of spreadsheets significantly. The simplicity of the programming model is arguably one of the reasons why spreadsheets are so widely used. (Another reason is their visual appearance, which, however, is not independent of the programming model.) A similar thing could be said about SQL and many other domain-specific languages: Their restricted expressiveness and their focus on a particular domain make them less abstract and closer to the problem domain they are designed to work for.

I suggest looking at very simple spreadsheet examples. Consider, for example, a table of numbers with a SUM formula for each row or column. To create such a table you have to copy the SUM formula from one row/column to all others. This is necessary, since you don't have a loop construct as a control structure available.

This illustrates a number of things, but most importantly the tension and trade-off between expressiveness (which requires abstraction) and concreteness (which needs redundant copies of formulas).
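
The trade-off can be shown side by side in Python. This is only an analogy: the 3x3 `table` and both variable names are invented for the example, and the cell addresses in the comments are illustrative.

```python
table = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]

# Spreadsheet style: one concrete formula per row, each a visible copy.
row_sums_copied = [
    table[0][0] + table[0][1] + table[0][2],  # like =SUM(A1:C1)
    table[1][0] + table[1][1] + table[1][2],  # like =SUM(A2:C2)
    table[2][0] + table[2][1] + table[2][2],  # like =SUM(A3:C3)
]

# Loop style: one abstract rule, stated once, applied to every row.
row_sums_looped = [sum(row) for row in table]

print(row_sums_copied, row_sums_looped)
```

Both versions compute the same sums. The copied version is redundant but every computation is written out where you can see it; the looped version is compact but asks the reader to imagine the individual sums.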

@erwig Thank you for those references to those articles (which I will follow up on).

@erwig Can I get you to elaborate a bit more here on why this limited version of spreadsheets makes them more interesting in terms of programming without taking into account user defined functions (with their loops, etc.)?

This may relate more to my interest in esoteric languages and their defamiliarization, but I find programming in a spreadsheet (with all of the user defined functions) interesting from the standpoint of the way the visual metaphor changes (for me) the sense of where programming happens -- and as a way to open up a sense of who is doing programming when.

While thinking about this topic, I came across this post on systems that are "Accidentally Turing Complete." It mentions some systems like Minecraft and Little Big Planet, which we discussed in CCSWG12.

Lately, I've been thinking about Excel as a partial realization of the dream of the PC as a fully programmable machine-- something like Smalltalk/Squeak or the Canon Cat. In so many contexts, Excel is functionally equivalent to Filemaker, HyperCard, and Visual Basic.

The spreadsheet seems to do away with the front/back stage split of the running program and interface. Instead, the spreadsheet interface is the program and both the user and the machine can manipulate cell values. Strictly speaking, then, all spreadsheets have one loop--the event loop that updates the interface.

As a software development environment, Google Sheets is especially interesting for its collaborative features. Have there been any multiplayer games written in a Google Sheet?

You can look at a simple spreadsheet (i.e. one that does not employ a loop control structure) and see all the computations that are performed at once. More specifically, by flipping back and forth between the formula and the value view, you can switch instantaneously between the program (formulas and values) and its result (only values). [Employing Frege once more, you can switch back and forth between the program's intension, or sense, and its reference.]

When you add loops, you fall back to an imperative program that requires time to execute. Specifically, in contrast to a simple spreadsheet, which always can show all values, the loop-employing spreadsheet has to go through a number of intermediate stages before arriving at the final result. This makes its meaning more difficult to understand, and it also makes it more difficult to debug.

This is closely related to why spreadsheets are so attractive visually: Only in a simple spreadsheet without loops can you see all formulas and values immediately. In contrast, a spreadsheet with loops produces many intermediate values that are never visible.

The difference of the temporal aspect also allows us to draw the following analogy: A simple spreadsheet can be visually represented by a picture (or by two pictures: one for the formula view and one for the value view showing the results), whereas a spreadsheet with loops has a movie as a visual representation.

I'd like to pursue this isomorphism between spreadsheets and other programming environments (particularly text-based ones), under the suspicion that the divide between end-users, spreadsheet users, programmers, and those who do data-entry is more convention than warranted -- or might obscure the similarities, to say it another way.

In the Week 1 Main Thread, I asked whether those who do data entry in a spreadsheet can be thought of as programming, and the feeling seemed to be that spreadsheets become programming when formulas are entered.

But, it seems to me that entering data into a spreadsheet is programming. I am assigning a variable a value. And if I make a column and populate it, I am creating an array with values. Last, my rows in a spreadsheet could be objects. For example, in my spreadsheet for addresses, each row is an object that has a name, street address, city, state, zipcode, and sometimes country.

I don't mean to be reductive, and I have to read @erwig's paper on this, where he may make these same points, but I do want to pursue this link between the two programming environments a bit further.

Yes, but assigning a value to a variable (or a bunch of values to an array) does not express an algorithm. If the values are referenced by formulas, they are inputs to the program/algorithm that the spreadsheet you are editing represents. The instant recalculation of the formulas amounts to running the program represented by the spreadsheet. So all that data entry is doing is providing input and executing a program.

There is an inherent difference between values and formulas. And this is not just the case in spreadsheets. The same is true for other languages, from arithmetic expressions to lambda calculus: Values denote themselves -- Frege might have said that the sense and reference of a value is the same. On the other hand, formulas and expressions need to be evaluated (or reduced to normal form). The process of evaluation, which we call computation, leads from an expression's sense to its reference.

A formula is an abstraction, which can represent many different computations when applied to different input values. This is not the case for values. It is an inherent property of an algorithm that it can be executed many times with different inputs. Thus if you are entering data, you are not encoding an algorithm, and therefore you are not programming.
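
A small Python sketch of this distinction, with made-up names and numbers: `average_formula` stands in for a formula written once, and the two lists stand in for two rounds of data entry. The formula does not change between runs; only its inputs do.

```python
def average_formula(cells):
    """Stands in for a formula like =AVERAGE(A1:A3), written once."""
    return sum(cells) / len(cells)


# Two rounds of "data entry": different values, same unchanged formula.
first_entry = [10, 20, 30]
second_entry = [2, 4, 9]

first_result = average_formula(first_entry)    # recalculation after first entry
second_result = average_formula(second_entry)  # recalculation after second entry

print(first_result, second_result)
```

On this reading, editing `first_entry` or `second_entry` supplies input and triggers a run, while editing `average_formula` is the act that changes the program.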

@erwig, thank you for taking my comments seriously here. I am trying to feel out the edges of this isomorphism and am at the same time working not to conflate programming in a spreadsheet with merely using a spreadsheet.

I do wonder though about this strict sense of algorithm here and what it preserves, especially in light of your book, which finds so many analogies.

For example, as I think of the Week 3 topic, I can think of a slave registry that involves an algorithm merely in making a table. Following the steps:

List a human that is for sale. List their value, repeat.

In other words, the process of "data entry" involves an algorithm. But again, I can see that such a claim misses and obscures the distinction you are making between values and formulas. What is being encoded in the above example is the value, not the algorithm itself.

Yes, this would be an algorithm.

Yes, it "involves" an algorithm insofar as it executes an algorithm. But it does not create an algorithm and thus does not constitute programming.

Exactly.

(I don't want to open another can of worms here, but if you want to blur the line, you could look into programming by example, which tries to specify algorithms by just giving example values. In that context, data entry could be considered programming. It then depends on the intention behind the data entry: If it is being used as input for an algorithm, it would not be programming. However, if it is used to specify an algorithm, it would.)

Yes, that was the can I was opening by bringing up the poem generator Taroko Gorge, which e-lit artists modified, often, mostly by changing the data. I feel like I've learned a lot about programming through this method, but only when it is followed by that one further step, where I start to play with the other code. Of course, entering values is the forerunner to creating formulas in cells....

Re: can simply entering values be programming? I'm not sure how to answer this, or whether this is the most productive version of the question. On the one hand, @erwig is correct to distinguish between a value and a code. Strictly mathematically, the way I'd put it is that a value and a code are both strings of symbols, and both have interpretations, but while the interpretation of a code involves the creation of a new string of symbols, the interpretation of a value does not. What becomes apparent in this wording is that it is not the string of symbols that makes the distinction, but the interpretation. In the context of a computer, all it takes is a misplaced jmp instruction to turn a value into a code. Likely the program will crash, but it's not theoretically impossible for this accident to create a better program.
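
As a rough illustration of the point that the interpretation, not the string, makes the difference, here is a Python sketch with a made-up miniature "language" (integers joined by `+`). The function names and the language are assumptions for this example only.

```python
def as_value(symbols):
    """Interpreting a string as a value yields the same string back."""
    return symbols


def as_code(symbols):
    """Interpreting the same string as code produces a new string.

    The 'language' here is a made-up one: integers joined by '+'.
    """
    total = sum(int(part) for part in symbols.split("+"))
    return str(total)


cell = "2+3"
print(as_value(cell))  # the string stands for itself
print(as_code(cell))   # the string is reduced to a different string
print(as_code("7"))    # a string already in normal form is unchanged
```

The same three characters are data under one interpreter and a program under the other, and a string that is already fully reduced reads the same either way.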

All of this being said, there is maybe no data without algorithm, and it makes sense to think them together. Even in the pre-mechanization era, records are often kept for procedural purposes. After mechanization but before code, data is created in very specific forms in order to lower its processing requirements, and changes in the way data is formed allow for more efficient processing. For example, recording deposits on a slip instead of in a bound daybook allows for multiple clerks to simultaneously record transactions with the same account. Having a ledger which allows for reorganization of the pages greatly improves the time it takes to search for a particular account. Now, if we wish, we can rigidly go through each of these things and distinguish process from data. But I think the question of what exactly is and isn't programming is harder, especially when we think about the complex labor systems that make these algorithms possible. Was it programming to invent the ledger with removable pages, or is it only programming to incorporate that ledger into a particular banking system? Is it programming to draw a header at the top of a ledger book and line the pages? Is it programming to enter values into the header at the top of a ledger book? I think these examples of pre-computer algorithms are useful for these questions, as we don't fall into the trap of erroneously thinking that everything we do is data and everything the computer does is algorithm.

Re: Turing completeness. I'm wondering whether being a universal (rather than a particular) Turing machine is really necessary anywhere. I don't know the answer to this mathematically. What Turing proved was that a universal Turing machine could be constructed that could make any calculation that a particular algorithm could make. The converse does not immediately follow. That is, it's not clear that there are particular algorithms—with the exception of the "be a universal Turing machine" algorithm—that require a universal Turing machine in order to be executed.

I'm continuing to think about how spreadsheets seem to join code and data in ways that other programming languages don't, but I don't know what to do with that yet.