Code and computer's creativity? hmmm...

Dear Working Group,

The idea that a series of lines of code alone can give rise to creative behaviour, and thus generate artworks whose sole author could be said to be the machine, is gaining ground… and faster than ever before.

I strongly oppose these views (but of course I welcome different opinions). Under the premises above, AI and art are not a good match, but I do not rule out that other ways of seeing this relation may exist and provide interesting results.

Recently, I have been exploring the limits of computer code (and AI methods) for artistic purposes.

I hope you do not mind if I start a thread with the following 10 lines of code, which underpin a work of mine from 2014 titled “AI Prison” (I do this only as a way to start a broader conversation... see the section "Questions" below).

#include <iostream>

int main()
{
    int i;

    while (i == 0) {
        std::cout << i;
    }

    std::cout << "RUN!" << std::endl;

    return 0;
}

Explanation

AI Prison is an artwork that engages, seriously and humorously, with ontological and philosophical issues surrounding AI. In that respect, the work’s premise concerns the possibility, or rather the impossibility, for a system to independently self-organize and evolve its own physical memory paths, let alone to be intelligent, conscious or creative. Trapped by a series of human-built ‘chains’ (virtual memory, memory management units, the OS, etc.), computer software is ‘locked’ within itself and denied the possibility to evolve and act intentionally. With these ‘chains’ in place, the complexity of the computational methods deployed does not matter (see any technical AI literature in that regard), since the chains are beyond the control of the program itself and cannot be broken by it (or are overlooked by the programmer).

Thus, simplicity will suffice here.

Description of how the code operates:

The aesthetics are generated by the output of a C++ program that repeatedly tests for evidence of intentionality, a test that, so far, has quite predictably come back negative. Because “i” is not initialised, the C++ language leaves its value indeterminate; in practice, the VM and MMU (controlled by the OS, not by the program) typically hand the program memory that has already been zeroed, automatically and on our behalf. Thus an endless stream of zeros is printed to the terminal. Note that in Java, for example, reading a local variable that has not been initialised is a compile-time error.

Can AI, then, break chains without even being aware of their existence? Well, if it does... RUN!!! The software has done something it wasn't designed for (AI dystopia).
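For readers who want to poke at the control flow without an endless loop, here is a minimal sketch, not the artwork's code. It assumes two things the original does not have: the starting value of i is passed in explicitly (in the artwork it comes from whatever the platform left in memory), and a hypothetical max_iterations cap stands in for "forever".

```cpp
#include <cassert>
#include <string>

// Hypothetical bounded restatement of AI Prison's control flow (not the
// artwork's code). `initial_i` replaces the uninitialised `int i;`, and
// `max_iterations` caps the loop that would otherwise run forever.
std::string prison_output(int initial_i, int max_iterations) {
    assert(max_iterations >= 0);
    int i = initial_i;
    std::string out;
    // Same test as the original: keep printing while i is still 0.
    for (int n = 0; i == 0 && n < max_iterations; ++n) {
        out += std::to_string(i);
    }
    // The original prints "RUN!" after the loop; here we only do so when the
    // loop ended because i was non-zero, not because the cap was reached.
    if (i != 0) {
        out += "RUN!";
    }
    return out;
}
```

With this sketch, prison_output(0, 4) yields "0000" (the prison), while any non-zero start, such as prison_output(7, 4), yields "RUN!" straight away: the escape the piece jokes about.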

Commentary

The piece is, of course, ironic, and it serves as a defence against a spreading epistemological positivism that increasingly influences media and digital arts.

Whether or not we believe that science can one day provide us with computers that mimic human behaviour, we, as people engaged in art practices, should be, probably more than anyone else, very careful in dealing with such topics, especially when ideas such as artificial intelligence (AI) and creativity or consciousness are presented in the same context. Beyond sci-fi plots, the relevant scientific and aesthetic literature too often offers texts in which computer programs generating art are addressed as creative entities. This is, in my opinion, a cultural trap caused by a wealth of concomitant factors (sociological, psychological, historical and philosophical), all more or less connected to a long-standing tradition of positivist attitudes towards knowledge.

It is not my intention to reiterate here the many historical and philosophical arguments presented for and against the feasibility of intelligent machines. Nor is it my intention to suggest that much of the work done in artificial intelligence and art is oblivious to such literature, or that many of these works are built on a genuine belief in computers’ ability to think creatively or to help us dream of a distant future. If anything, my intention is instead to reflect on the limitations that our reality, in fact a heavily technologically mediated reality, imposes on our dreams.

Questions

Where, and how, does code syntax start becoming (or is it forbidden from becoming) an intentional act of artistic expression (semantics?) for the computer? (Or for the human, if you wish... an equally interesting question.)

I hope you will welcome this proposal.

Looking forward to hearing from you.

Kind Regards

Giuseppe Torre

Details of the work

A video of its first prototype is available here:

The work has been discussed, briefly, in the following article:

Torre, G. (2017), "Expectations versus Reality of Artificial Intelligence: using Art to Reflect on and Discuss some of the Ontological Issues", Leonardo Art Journal - MIT.

The artwork is now part of the permanent collection of the BLITZ Contemporary Art Gallery in Malta.

Comments

Thanks for sharing this example @gtorre. But let me act like a human and not answer your question.

I feel this piece can be read in a totally opposite manner (and in many other ways): you are telling a sort of joke by programming your virtual car to run endlessly into a wall, and drawing from that a kind of parable about the limitations of machines.

But the fact that you are telling this joke in this way seems to express a greater anxiety.

I think that Gödel, Escher, Bach might be useful in unpacking this program, in which the program cannot terminate itself or realize that it needs to terminate.

BTW, for those wanting an online compiler for C++, check here.

To run the code online (briefly, within a strict output limitation):

https://www.jdoodle.com/online-compiler-c++#&togetherjs=oMIMnd2zj7

@gtorre -- I'm interested in the word "intentional" in your 2014 piece description and in your question. For example, say that a particular computer was left running for a long time, and due to aging material substrate -- RAM error / motherboard failure / network packet error from a cloud-hosted or virtual machine, et cetera -- one of the "voltage differences" ended up generating a 1, breaking the while loop and printing to the screen. This could happen.

If it did, would that meet your criteria for intentionality?

Anticipating the creative and critical coding week, I'd encourage our participants to explore this code by modifying it and writing their own versions of it!

For me this work raises a different and related question, and maybe one that ties together all 3 weeks of this workshop: Is it a coincidence that there is a move to see machines as creative in a period in which there is increased recognition that women and people of color are creative? It reminds me of the canon wars: the moment literature by authors other than white men becomes canonized is the moment you see an attack on the very idea of the canon.

I want to create my own, but I did not see the command prompt or terminal on the website. Could you help me?

See my version sir Mark Marino

Thank you for your comments

Yes and no. The case in which the program generates something other than 0 is indeed possible, as you correctly point out, though it is a really remote possibility. I cannot give the exact probability of its occurrence, but its existence cannot be excluded. The most probable way of getting a non-zero value is perhaps at the first cycle/run, when memory is allocated to the program. In this case, for some unpredictable reason, the MMU and VM fail to zero (or find) the address space to be allocated to the program and provide an already-filled memory space (likely holding anything but zero). I am sure that those who are able to code at machine-code level can offer more examples of how to exploit other possibilities, and it would be really interesting to hear them.

Let's assume for a moment that a non-zero value is returned.

Is that a sign of intentionality? My view is that, rather than being a sign of intentionality in itself, this occurrence represents a breaking free (from the "prison", indeed) that could potentially (not necessarily) lead to the evolution of an entity that, through natural selection, could eventually develop intentionality.

In other words, it would be as if the "virtual" software, normally locked in itself and bound to simulation, leaked into the physical world (through the management of its own memory allocation). By entering the physical world, it would have an opportunity to participate in the real/natural (not digitally simulated) process of evolution. For this, we would need multiple computers running the code discussed here and communicating with one another. Then we would intervene on them by forcing the break-free (through a voltage difference, for example) and see what happens. Let's not forget, though, that the software should also "decide" not to exit once a non-zero value is returned.

OK, I have gone too far, apologies. Nothing would happen this way for probably billions of years, or ever.

In short, it seems that the demiurgic powers (in the Platonic sense) we wish to attribute to ourselves through programming something (AI) lock us in a spiral of wanting to account for everything. However, as the code shows, no matter how sophisticated the AI algorithms we devise, we will always be behind ourselves (in our case, we have delegated the decision about the value of "i", as C++ implicitly allows).

In that sense, code complexity is superfluous; hence the simplicity of the code proposed. To paraphrase Searle talking to the Google AI team (needless to say how opposed their views were), there is no reason to assume, nor any proof, that more syntax (i.e. code) gives rise to semantics.

Why, and how, then, should the attempt to simulate intentionality, as implied by AI software developed to make art (understood as an intentional act), equal human (real) intentional acts?

One would end up questioning the entire raison d'être of art and code at this pace... but hey! Why not?

That is really interesting. I never thought of them in relation to this work. Could you share some links?

I was referring to some of the ideas in Gödel, Escher, Bach: An Eternal Golden Braid by Douglas Hofstadter. In that book he wrestles with the complexities of systems that cannot realize that they won't terminate:

and earlier:

Here, he is discussing the potential for computers to be programmed in such a way that they recognize when their operations (searches, calculations, etc.) will never terminate.

I wonder how much of this idea of the intention (purpose, goal, aim) relies on simplicity vs. complexity.

Example 1: AI Prison gives a while loop a 0; it gave the program everything it has; we know (barring bizarre events) why it chooses zero, because that is the only choice we gave it. There is no choice but ours, we who set the initial conditions that determine the outcome, and we are able to predict that outcome before it happens.

Example 2: Netflix has 100,480,507 ratings that 480,189 users gave to 17,770 films (the Netflix Prize). It chooses the BigChaos machine learning algorithm, and the algorithm recommends that I watch the film "Apollo 13." In this case we also gave the program everything it has, and we know in some sense why it chose what it did, and yet we also do not know -- we had no idea what film the machine would recommend until it did so, and the logic of the resulting multidimensional model is in part a black box to us. Even when we examine many parts of that model in detail it is difficult to describe what it is or what it does as a specific process or plan using narrative language -- the math is relatively simple, the data is all at hand, but the model is in part an alien artifact, like (and yet utterly unlike) a mind.

I shamelessly admit that I wasn't aware of this literature milestone!

I am reading on it and watching a lecture series too https://ocw.mit.edu/high-school/humanities-and-social-sciences/godel-escher-bach/index.htm

wow, thanks for pointing this out!

Yes I agree it could be useful and fun too to unpack the code through it!

@jeremydouglass

In relation to your Example 1, allow me to point out that, technically, neither the program nor the programmer makes the choice to give 0 to "i". In fact, the variable is declared but not initialised (reading it in that state would be a compile-time error in Java, for example). The value 0 is given instead by a whole series of decisions made by those who programmed the OS, VM, MMU and so on down the path to the CPU. In short, by all the back-end work required to compile and run the program.

As for Example 2, I agree: the model is in part an alien artifact. Indeed, many AI algorithms work on this basis: we have an input, a sought target, and what lies in between is a sort of black box.

While intelligence can be seen in the outcome of the program, I think it is this air of mystery in the intermediate processes that fosters ideas of consciousness, intentionality, creativity, etc.

@gtorre: re:

That is a very good point. Default initialization (or implicit initialization), such as int = 0, boolean = false, object = None / NULL, et cetera, is common in programming, but it may not come for free, and declaring variables that must be implicitly initialized is not allowed in some programming languages.

I think I understand what you are saying about not ascribing creativity, agency, authorship, or choice to a black box. It was helpful that you referred to Searle (and the Chinese Room). I wonder if this means that you agree with Searle's basic position on AI when you consider computer creativity. Is it the case that creativity is Strong AI, and Strong AI is by definition impossible for a computational algorithmic system? Would any conceivable code fail, by virtue of being code?
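In C++ terms, the guaranteed counterpart of "int = 0, boolean = false, object = NULL" is value-initialisation with empty braces. A minimal sketch (the Defaults struct and make_defaults function are hypothetical names for illustration):

```cpp
#include <cassert>
#include <string>

// Value-initialisation: empty braces request each type's "zero" value.
// These are language guarantees, checked here at compile time.
static_assert(int{} == 0, "int value-initialises to 0");
static_assert(bool{} == false, "bool value-initialises to false");
static_assert(double{} == 0.0, "double value-initialises to 0.0");

using IntPtr = int*;
static_assert(IntPtr{} == nullptr, "a pointer value-initialises to nullptr");

// Hypothetical record mixing built-in and class types.
struct Defaults {
    int i;
    bool b;
    IntPtr p;
    std::string s;
};

// `Defaults{}` value-initialises every member. A plain local `Defaults d;`
// would instead leave i, b and p indeterminate (s is still constructed as "").
inline Defaults make_defaults() {
    return Defaults{};
}
```

Writing `int i{};` in AI Prison would opt into this guarantee; the bare `int i;` opts out of it, which is where the "may not come for free" trade-off shows up in C++.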

I think this is a key question in what we label as creativity, machine or otherwise. What we talk about as machine creativity is often based on the idea that the machine can find connections that humans don't, or, alternatively, that the machine tries and fails to find connections that humans understand†. In both of those cases the moment of surprise that we discuss as creativity is the result of putting input through a frame shift and comparing the two outputs.

Humans do the same thing with each other, of course: creativity is subjective and two people with shared backgrounds and experiences will be less likely to find each others' work broadly creative than two people coming from different perspectives‡.

But this line of thinking raises an odd question in my mind: if two humans with different perspectives can find one another creative, can two algorithms find each other creative? Though maybe it's not quite as odd a question as it seems at first.

Thought experiment: You have two convolutional neural networks that you want to identify objects in a corpus of videos. They've been trained on different datasets, but both work in generally the same way: they analyze a frame of video, identify what they think is an object, assign a confidence level to the relationship between the identified object and a concept/word it already knows, and output the word, confidence level, and time/geo codes for the object.

If you were then to feed the output from one into the other as more training data, would the second find the first one "creative" when results don't match up? In that case you could even quantify creativity by measuring divergence.
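One way to make "measuring divergence" concrete is the Kullback-Leibler divergence between the two networks' confidence distributions for the same frame. A minimal sketch, assuming each network's confidences have been normalised to sum to 1 over a shared label set (kl_divergence is a hypothetical helper, not part of any CNN library):

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Kullback-Leibler divergence D(p || q), in nats, between two discrete
// distributions over the same labels. Assumption: both inputs are already
// normalised so that their entries sum to 1.
double kl_divergence(const std::vector<double>& p, const std::vector<double>& q) {
    assert(p.size() == q.size());
    double total = 0.0;
    for (std::size_t k = 0; k < p.size(); ++k) {
        if (p[k] == 0.0) continue;  // 0 * log(0 / q) is taken as 0
        if (q[k] == 0.0) {
            // p sees something q rules out entirely: unbounded "surprise".
            return std::numeric_limits<double>::infinity();
        }
        total += p[k] * std::log(p[k] / q[k]);
    }
    return total;
}
```

KL divergence is asymmetric: how "surprised" the second network is by the first is not, in general, how surprised the first is by the second, which arguably suits the thought experiment. A symmetric alternative would be the Jensen-Shannon divergence.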

Or, put more simply, do we have to define creativity relative to human experience?

† Including connections to random or pseudorandom processes, particularly generative art, etc.

‡ I'd say this generally, but creativity also relies on shared experience, and there is creativity that requires specific domain knowledge, etc., etc. My point is that some kind of different perspective is a necessary condition, not sufficient.

Correct, that is what I think and what I tried to put into the ten lines of code.

In addition, while I agree with Searle and appreciate the logic (and simplicity) of his argument against strong AI, my real views side with phenomenology (and what is more anti-positivist than that?).

In contrast with @belljo, my opinion is that simplicity suffices because, no matter how perfectible any algorithm is or will be in imitating us, "humanity is not produced by the effect of our articulation or by the way our eyes are implanted in us" (Merleau-Ponty, Eye and Mind, p.125 https://pg2009.files.wordpress.com/2009/05/eye-and-mind-merleu-pontymmp-text1.pdf ).

and:

This is quite similar to asking the question: Can we define an algorithm that could decide whether or not algorithms terminate? The answer is "no." It follows that algorithms cannot "know" whether they themselves terminate. This is the famous Halting Problem, conceived in 1936 by Alan Turing as an example of an undecidable problem.

If you want to get a sense of how an algorithm "might feel" not knowing about its own termination, watch the movie Groundhog Day, in which the protagonist Phil Connors relives the same day over and over again and tries to figure out how to escape from this endlessly repeating loop. (BTW, I use the movie Groundhog Day in my book Once Upon an Algorithm: How Stories Explain Computing to explain the Halting Problem and why it is unsolvable.)
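The asymmetry can be sketched in code: a runner with a step budget can confirm that a program halted, but when the budget runs out it can only answer "unknown", never "runs forever", and Turing's result says no choice of budget fixes this in general. In this minimal sketch the "programs" are hypothetical state machines whose step() returns false once they halt: AIPrison models the thread's while loop, and Countdown is an invented program that does halt.

```cpp
#include <cassert>

enum class Verdict { Halted, Unknown };

// Run `program` for at most `budget` steps. Its step() returns false once the
// program has halted. Running out of budget proves nothing about termination,
// so the only honest answers are "halted" and "unknown".
template <typename Program>
Verdict run_with_budget(Program program, long budget) {
    for (long n = 0; n < budget; ++n) {
        if (!program.step()) {
            return Verdict::Halted;
        }
    }
    return Verdict::Unknown;  // not "loops forever": we merely stopped watching
}

// Hypothetical model of `while (i == 0) { std::cout << i; }`: nothing in the
// loop body changes i, so every step reports "still running".
struct AIPrison {
    int i = 0;
    bool step() { return i == 0; }
};

// Hypothetical program that does halt after counting down to zero.
struct Countdown {
    int n;
    bool step() {
        if (n == 0) return false;
        --n;
        return true;
    }
};
```

Note that Countdown{5} with a budget of 3 also comes back "unknown": from the outside, a program that has not halted yet looks exactly like one that never will, which is Phil Connors' predicament.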

One thought on that "definition" is to look at models of legal definition, such as (for example) the requirements of US patent law, which include both "novelty" and, perhaps more interestingly, the "nonobvious" patentability requirement of 35 U.S.C. § 103

...as interpreted in, e.g., Graham v. John Deere and in KSR v. Teleflex.

So if there are known methods within a domain, and you apply them to a new problem with predictable results, your result may be novel... but it is obvious (not creative). By analogy, if a computer has a bunch of known algorithms with known results, and it applies them, it would never be considered creative. The machine can apply a combination of Photoshop filters to an image, in any order, but the effects would have been obvious to a person having ordinary skill in the art.

The interesting thing about these legal tests is that they imply that a patent-application-writing computer program might not have that difficult a time meeting this particular standard of the patent office. It would simply need to output a proposed solution to a problem in a way that "a person having ordinary skill in the art" would find surprising. That's actually very easy to do for simple systems in some domains. A simple example might be Sodarace: when asked to build very simple walking machines out of joints, rods, and springs, a genetic algorithm produces some counterintuitive and fantastical-looking spring-creatures which use crazy methods of traversal, yet may nevertheless be faster than the carefully wrought design and "intuitive" or "obvious" design strategy of a human competitor -- who attempts to build a person, a horse, or a caterpillar out of sticks... and loses the race to a shaking triangle with hairy corners.

@erwig yes, some similarities with the Halting Problem may be there, although, of course, the code presented here does not pretend to offer any logical proof of it, but rather hopes to ignite a discussion on that strange marriage between AI and computer creativity.

The strangest thing for me in this "marriage" is that, by necessitating a reframing of the term creativity, it reduces it to some mechanical way of achieving novelty (I like the way this also seems evident in the legal domain, @jeremydouglass), which is a highly reductive way of describing art and the creative processes underlying it.

This line of thought is similar to discussions of thinking/consciousness in computers, in which thinking is reduced to calculus.

Somehow I feel that this renewed interest of the AI field in the arts is a way of cutting short the quest for proof of intelligence, as if to say: "Look! The computer is creative, and what is more human than making art?! There you go, then: computers are intelligent, can feel, can think, etc."

Or, alternatively, it may mean that artists got bored of themselves or their practice.

In thinking about our Week 2 conversation, I was reflecting on the nature of porting and what that has to offer our discussion, but perhaps we could think about 'bad translation' as well. For example, the following program will do what @gtorre's program does:

Which does the same thing as your program, except it doesn't. This is a purposefully bad port. It produces the same output as your program, yet it's fundamentally different. (Although there's always creative misreading, too.)

In making that port, I had to ask myself not just "What is this program's output?" but "What does it mean?" Or rather, how does its shape determine its meaning? What in the original language allows "AI Prison" to mean what it does? Could this other language express the same idea? How does one implementation capture the spirit of the program more than another, the intention as explained by the artist, or the meaning that we assign to it? What affordances would I have to tap into for the same effect? (And of course, it could never be exactly the same.)

I think that this exercise, an experiment in translation, has a lot to say for what the experience of porting adds to the praxis of CCS.

This is interesting because I would actually argue the opposite. When faced with very different forms of creativity than what one has been exposed to, people often don't see it as creative. Examples that come to mind out of my research include forms of repetition with difference or variations on a theme that are common in African Diaspora creativity but seen as uncreative under a Western Romantic author model. Or communally-owned creativity like women's craft traditions or indigenous traditions that don't "count" because of how we think about authorship. So then much would depend on what assumptions got built in to any machine trying to assess creativity (which I hope to attempt someday).

Yes, I agree with that; it's part of what I meant by creativity also relying on shared experience. Without that shared experience most people won't really know where to categorize what they're seeing. It seems like creativity occupies a sweet spot between familiar and alien. To extend the machine creativity thought with an extreme example, an algorithm trained to identify objects wouldn't have any means to evaluate DeepDream's creativity.

I'd find it interesting to compare the results of the same machine learning algorithm that was trained on material coming from two different cultures, and then compare those results to using the same set of training material to train two algorithms written by programmers coming from different cultural traditions. Is that the machine version of nature vs. nurture? I don't know; I'm going to assume someone has done something similar to this though. I'll look around a bit.

and:

This seems to be related to the difference between sense and reference (as identified by Gottlob Frege). Both programs have the same reference (they have the same semantics/behavior), but their sense might be different.

Except that if their reference is the code they produce, they may or may not have the same reference. In this case, any modern C++ compiler will reduce this thread's code to an assembly language version of Mark's badly ported code. The compiler will also produce a warning for a variable used uninitialized, and for unreachable code, but let's assume we don't care about that. If you tell the compiler to avoid optimization, the code it will produce will be something like "set [location] zero; loop: branch to end if [location] is not zero; stash parameters for printing [location] and call the printf routine; branch to loop; end: stash parameters for printing "RUN!" and call the printf routine."

Since the latter uses branch prediction, and the former doesn't, if I run something on another thread, the cache will be in different states depending on which of the two programs is run.

Also, suppose I run these two versions on an operating system in which some joker made a routine that periodically gets a CRC of the whole memory and auto-crashes it when it's equal to his favorite number, "42." Maybe one program crashes the computer and the other doesn't.

Also, suppose this is an embedded system. And suppose a horde of AIs who have taken to physical embodiment find this poor old imprisoned AI, and then they install some hardware to run a DMA attack in order to get him out. In the while() version, they need to have their attack change the writable portion of the memory, and so they succeed. In the goto version, the only way out is to change the code, which is in another portion of memory and isn't writable via DMA. So the difference between these versions can be the difference between escape and prison.

Anyway, I'm skeptical of Frege. Language always has a material aspect that doesn't cease to matter when it participates in a semantic system. That material seeps into sense, and sense seeps into reference.

@markcmarino

Absolutely! Indeed, the output of the program is probably the least important element, serving, more than anything, to let its audience gaze at an infinite stream of zeros.

Beyond (or beneath) the loop itself, it is the statement “int i;” that, in my mind, “takes the stage”, in that it points to axioms of the language (i.e. the fact that C++ does not require a local “int” to be initialised, leaving its value to whatever the underlying platform has put in memory, which in practice is typically 0). And axioms as such are something established, accepted; something we do not need to think about. I argue instead that we do need to, because by doing so we confront ourselves with the constraints (logical, real, interpretative, etc.) of the system we use and can speculate/reason on what it affords us, or not.

In our case, the above can also be read as a way of saying that we often think of coding in a specific language as a way of speaking the machine's language directly, forgetting that code is an abstraction separated by many (also abstract) layers (VM, MMU, OS, etc.) from the physical/real layer of the hardware.

In doing so, these multiple layers of abstraction, in my opinion, offer fertile ground for interpretation. We look inward rather than outward (at the output).
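A nuance on the "axiom" in “int i;”, sketched below with illustrative names: the zero that the C++ standard itself guarantees applies to variables with static storage duration, not to an ordinary local such as the one in AI Prison, which is why the zeros ultimately come from the layers beneath the language.

```cpp
#include <cassert>

// Namespace-scope variable: static storage duration, so the language itself
// zero-initialises it before main() runs.
int global_count;

// A function-local `static` also has static storage duration and starts at 0.
int next_call_number() {
    static int calls;
    return calls++;
}

// By contrast, a plain local such as `int i;` inside main() has automatic
// storage duration: its value is indeterminate, and reading it before writing
// to it is undefined behaviour. Any 0 it shows comes from the platform (for
// example a freshly zeroed stack page), not from a rule of the language.
```

Declaring the artwork's variable as `static int i;` would turn the "likely zero" into a guaranteed zero, and arguably remove the very layer the piece points at.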

P.S. I am going through the non-eleatic version of the program you offer in @ebuswell's thread. Really interesting! (I will reply on that thread.)

@erwig Re: Frege’s sense and reference. I am not familiar with it. I will attempt a read, although I must admit I still struggle with logical philosophy... (Oh, I just read @ebuswell's reply. I will definitely need more time to understand it and answer meaningfully.)


@melstanfil and @belljo:

Cultural norms undoubtedly play an important role in judging creativity. Yet even within Western culture no agreement has ever been reached (which is also good, frankly). I liked the quote “creativity occupies a sweet spot between familiar and alien”. I see art as a grey zone too, in a precarious balance between extremes, if you wish. But then I would ask: how good are binary systems at defining those grey areas? Statistical analysis is too dependent on inputs, which are difficult to agree upon… “probable” should somehow be substituted with “maybe/hmm/quite there/etc.”, but how would you code that?

Great analogy. Could I get you to play out how you see it applying and, if you would, any ways in which you think Frege's explanation doesn't quite apply?

I think the difference between sense and reference is analogous to the difference between an algorithm and the result it computes. In the running example of this thread, you can compute an infinite list of zeros using a while loop, a goto statement, or recursion. These are (slightly) different ways of achieving the same effect. As another example consider sorting a list of numbers. You can do this using selection sort (which works by repeatedly finding the smallest number in an unsorted collection) or mergesort (which splits a collection, recursively sorts the two smaller collections, and then merges the sorted results). These are two very different algorithms that also have markedly different runtime complexities, which reflects their different senses (or meanings). But when applied to a collection, they both produce the same result. In this case the reference is the function computed by executing the algorithms, which is the same.
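The running example can be made concrete with three bounded versions, where a hypothetical cap n stands in for "infinite": the same reference (the string of n zeros), reached through three different senses.

```cpp
#include <cassert>
#include <string>

// Three senses, one reference: each returns the first n zeros of the stream.
// The bound n is a hypothetical stand-in for the infinite loop.

std::string zeros_while(int n) {
    assert(n >= 0);
    std::string out;
    int count = 0;
    while (count < n) {
        out += '0';
        ++count;
    }
    return out;
}

std::string zeros_goto(int n) {
    assert(n >= 0);
    std::string out;
    int count = 0;
loop:
    if (count >= n) goto done;
    out += '0';
    ++count;
    goto loop;
done:
    return out;
}

std::string zeros_recursive(int n) {
    assert(n >= 0);
    // Base case: no zeros left to produce.
    if (n == 0) return std::string();
    return "0" + zeros_recursive(n - 1);
}
```

All three agree on every n, yet a reader (or a DMA attack) meets a different shape in each.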

Another way of looking at this is to view the operational semantics of a program, which amounts to a trace of intermediate results or machine states, as the sense/meaning of a program, and the denotational semantics, which is the computed function, as the reference.
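A minimal sketch of that distinction for the sorting example, assuming we log a snapshot after each selection-sort pass and after each merge (the logging scheme is our own choice, not a standard definition of a trace): the traces differ, the final results do not.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using List = std::vector<int>;
using Trace = std::vector<List>;  // snapshots of intermediate states

// Selection sort: repeatedly move the smallest remaining element into place,
// recording the whole list after every pass.
List selection_sort(List xs, Trace& trace) {
    for (std::size_t i = 0; i + 1 < xs.size(); ++i) {
        std::size_t smallest = i;
        for (std::size_t j = i + 1; j < xs.size(); ++j) {
            if (xs[j] < xs[smallest]) smallest = j;
        }
        std::swap(xs[i], xs[smallest]);
        trace.push_back(xs);
    }
    return xs;
}

// Mergesort: split, sort both halves recursively, then merge, recording each
// merged sub-list (a different kind of intermediate state).
List merge_sort(const List& xs, Trace& trace) {
    if (xs.size() <= 1) return xs;
    const auto mid = static_cast<List::difference_type>(xs.size() / 2);
    const List left = merge_sort(List(xs.begin(), xs.begin() + mid), trace);
    const List right = merge_sort(List(xs.begin() + mid, xs.end()), trace);
    List merged;
    std::size_t a = 0, b = 0;
    while (a < left.size() && b < right.size()) {
        merged.push_back(left[a] <= right[b] ? left[a++] : right[b++]);
    }
    while (a < left.size()) merged.push_back(left[a++]);
    while (b < right.size()) merged.push_back(right[b++]);
    trace.push_back(merged);
    return merged;
}
```

On the input {3, 1, 2}, for instance, selection sort passes through {1, 3, 2} while mergesort passes through {1, 2}; both end at {1, 2, 3}.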

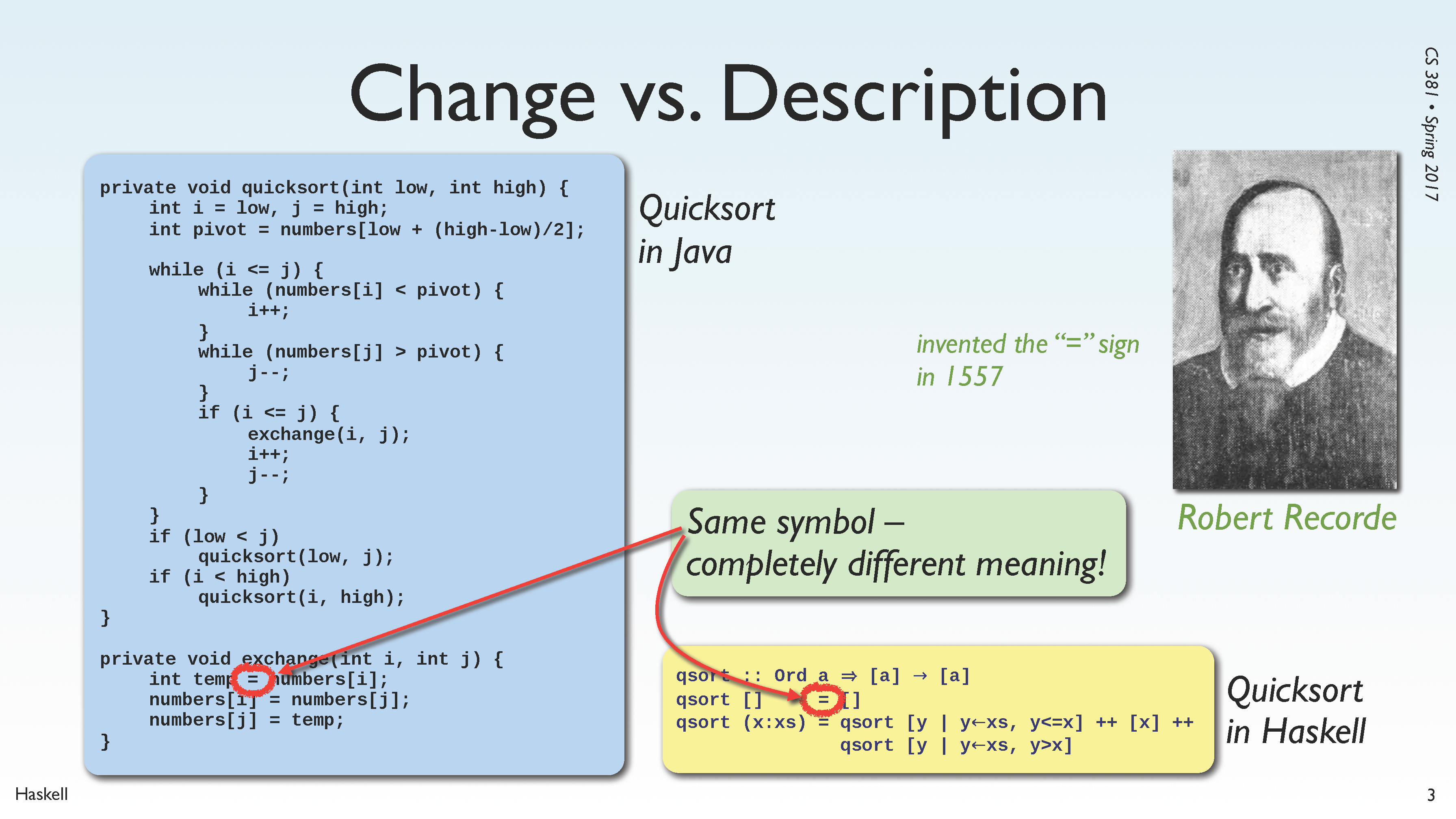

Note that this view considers two different programs in the same language. We can also consider the flip side and look at the same program expressed in two different languages. This situation is more complicated, since the differences in languages may or may not in any individual case lead to a difference in sense. As one example, you may want to take a look at this slide, which shows Quicksort as it is typically implemented in Java and Haskell. The sense of these two implementations of the same algorithm is very different.

Thanks @erwig for your clear explanation. The picture you offer clarifies the idea greatly (thanks!).

If the object of the analysis is the algorithm and its output, then your interpretation of the code through Frege’s sense and reference offers some insight.

Under these premises, it is true that the same output can be achieved using many (if not all) other programming languages (e.g. 10 PRINT "0";: GOTO 10 ).

No objections to this. I guess what interests you, from this point, is an analysis of how different syntaxes achieve the same results. I see something in this.

Yet, in addressing @markcmarino's question ....

… I think we have to look away from the algorithm-result pair (although, admittedly, I would love to play around with a DMA attack to see its output change, @ebuswell).

In my mind, the “spirit of the program” is not in the loop but in the “int i;” statement, because it points to implicit assumptions (or programmatic axioms) that this specific language, C++, inherited.

Thus, instead of pointing to the outcomes/results/output (i.e. the stream of zeroes), the work and its code attempt to point inwards, into the assumptions of the specific language. They do so in order to highlight the distance between the virtual/abstract/artificial domain of software and reality (the stream of electrons that make up a computer, if you wish).

Software is imprisoned, locked in itself. All talk of creativity in machines is just speculation, or a dream of omnipotence.

But there is more. If we were to analyze syntax in a sort of comparative study across programming languages, I would use a Heideggerian starting point: we do not speak language; language speaks us. (I am paraphrasing him; the actual quote is: “Man speaks only as he responds to language. Language speaks. Its speaking speaks for us in what has been spoken.”)

...and this perhaps mediates between a Fregean analytical approach and the material aspect of language mentioned by @ebuswell.

@erwig: nice example of comparing the uses of = in Java and in Haskell.

Although I am not fluent in Haskell, this is my understanding of the contrast:

In Java, = is the assignment operator -- "make it so" -- and that common use traces its roots back to just before FORTRAN.

In Haskell, = is a definition operator -- "a rose is a rose" -- asserting that these are equivalent, and as such may be substituted for one another. This common use in mathematics may be traced back over four centuries.

So we can articulate the differences in code languages, code language families, and their paradigms (e.g. imperative, functional) -- and we can also consider those differences in light of the histories that inform those paradigms.

Having read @jeremydouglass's response, I noticed an error in my previous response, where I said:

More correctly, I should have said:

How, from an analysis of identical signs and/or combinations of them (e.g. =), we can evolve different syntactical properties and yet yield the same results.